Using LLMs Well

We’ve previously discussed many of the perils of bringing LLMs into your workflow when you are still learning to program. To recap:

LLMs are very good at basic programming. As a result, they can do many of the assignments we are asking you to do.

BUT: LLMs are not as good at the kind of work you will be doing professionally. At the professional level, LLMs require supervision, and you can only provide that supervision if you understand programming principles well enough to follow along. And you can’t learn programming principles without doing those early programming exercises yourself.

In other words, you have to know how to do the things that LLMs can do now so you can develop the skills required to do the things that LLMs can’t do down the road.

But you’re now a little further into your education, so it’s time to discuss healthy habits for LLM integration. With that in mind, here are my Do’s and Don’ts for LLM usage (when writing code):

1. Don’t Start with the LLM: Course Materials are Better

2. Do Use LLMs for things that are:

   - labor-intensive to do,
   - you could do yourself, but which are
   - easy to verify have been done correctly.

3. Don’t use LLMs for things you don’t understand and can’t easily verify.

4. Don’t let LLMs make suggestions in real time.

5. Do use an LLM that can see your project.

1. Don’t Start with the LLM: Course Materials are Better

Your instructor, your TAs, the materials you were assigned for a class, and your peers will almost always be better resources for you than LLMs in a reasonably well-designed class. That’s because they understand what you have been taught, where you are in your learning journey, and the learning goal of the assignment you’re working on. None of that information is available to an LLM.

As a result, we faculty see countless examples of students turning to LLMs when they hit a problem, only to have the LLM lead them way off track. The answers LLMs give students may work, but they’re often convoluted, unnecessarily complicated, and/or use packages or techniques that have not yet been introduced. The consequence is that students may manage to solve the problem in front of them, but they often won’t learn what they’re supposed to learn.

2. Do Use LLMs for things that are

- labor-intensive to do,
- you could do yourself, but which are
- easy to verify have been done correctly.

Tasks that meet these three criteria are what I think of as being right in the wheelhouse of AI. Using AI for things that are easy to do just dulls your skills, while using it for things that are annoying and time-consuming makes you more efficient. Moreover, asking for help with things you could do yourself (maybe with a little Google help) means you’re asking for help with something you know well enough to check. And finally, tasks that are labor-intensive to do but easy to validate mean you don’t have to rely too heavily on the AI to get things right.

Examples of this type of task include:

Making refinements to matplotlib figures: matplotlib has a thousand and one different methods and ways to tweak plots, so finding the exact one that changes a plot in a particular way (e.g., “add text rotated ninety degrees between the figure and the legend”) can take you FOREVER. AI lets you say what you want in plain English and leaves the challenge of figuring out the exact incantation to the LLM. And of course, you can immediately look at the result to see if it worked! That said, AI won’t always get things exactly right, so understanding at a conceptual level how a library like matplotlib works will let you tweak the solution the LLM provides.

Changing the way that files are named throughout a large project: often when we build big projects, we realize later on how they could be better organized. Recently, I had a project where I needed to change the structure of file names, such that any file in a folder with a certain name needed to have that folder name inserted into the middle of the file name. I am confident I could use Python to iterate through all the folders in the project, use regular expressions to match the appropriate folders, then restructure the file names with regular expressions. But would it take me forever? Oh yes. So I asked an LLM. It wrote me a Python script I could skim; then I ran it and browsed through the files to check they looked right. Also, because my project was stored on GitHub, I knew I could easily roll things back if I needed to.
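A script like the one described above might look roughly like the minimal sketch below. The folder name (`results`) and the naming scheme (`<prefix>_<rest>`, with the folder name inserted after the first underscore) are hypothetical assumptions, not the actual project’s conventions. The sketch separates planning from renaming so you can review every proposed change before anything touches the disk, which is exactly the kind of easy verification that makes this a good LLM task.

```python
import re
from pathlib import Path

# Hypothetical folder name: files inside any folder with this name get renamed.
TARGET_FOLDER = "results"

# Hypothetical naming scheme "<prefix>_<rest>", e.g. "fig_01.png".
NAME_PATTERN = re.compile(r"^(?P<prefix>[^_]+)_(?P<rest>.+)$")


def planned_renames(project_root, target_folder=TARGET_FOLDER):
    """List (old_path, new_path) pairs without touching the disk."""
    renames = []
    # rglob searches the project root and every subfolder for the target name.
    for folder in Path(project_root).rglob(target_folder):
        if not folder.is_dir():
            continue
        for path in sorted(folder.iterdir()):
            if not path.is_file():
                continue
            match = NAME_PATTERN.match(path.name)
            if match is None:
                continue  # leave files that don't fit the scheme alone
            if match["rest"].startswith(f"{target_folder}_"):
                continue  # already renamed; keeps the script safe to re-run
            new_name = f"{match['prefix']}_{target_folder}_{match['rest']}"
            renames.append((path, path.with_name(new_name)))
    return renames


def apply_renames(renames, dry_run=True):
    """Print each planned rename; only rename files when dry_run is False."""
    for old, new in renames:
        print(f"{old} -> {new}")
        if not dry_run:
            if new.exists():
                raise FileExistsError(new)  # never silently overwrite a file
            old.rename(new)
```

Calling `apply_renames(planned_renames("my_project"))` (where `my_project` is a placeholder path) with the default `dry_run=True` only prints the planned changes; pass `dry_run=False` once the list looks right, ideally after committing the project so you can roll back.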

If you’re asking AI to help with a task you could do yourself, that also implies it’s a task you can supervise.
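To make the matplotlib example concrete, here is roughly the kind of code an LLM might return for the rotated-text request. It is a sketch, not the only way to do it: it assumes the legend sits outside the plot area on the right, and the data, the label text, and the coordinates (`1.03` for the text and `1.10` for the legend, both in axes units) are illustrative assumptions you would adjust. Supervising it takes one look at the saved image.

```python
import matplotlib

matplotlib.use("Agg")  # render to files only; no window needed
import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(6, 4))
ax.plot([0, 1, 2, 3], [0, 1, 4, 9], label="quadratic")  # illustrative data
ax.plot([0, 1, 2, 3], [0, 1, 2, 3], label="linear")

# Park the legend outside the axes on the right, leaving a gap for the label.
ax.legend(loc="center left", bbox_to_anchor=(1.10, 0.5))

# Vertical text in the gap between the plot area and the legend.
# With transform=ax.transAxes, (0, 0) is the lower-left corner of the axes
# and (1, 1) the upper-right, so x just above 1 sits right of the plot.
label = ax.text(
    1.03, 0.5, "hypothetical note",
    transform=ax.transAxes,
    rotation=90,
    va="center",
    ha="left",
)

# bbox_inches="tight" enlarges the saved area so the outside legend isn't clipped.
fig.savefig("rotated_text.png", bbox_inches="tight")
```

If the text collides with the legend, nudging the two x values apart is usually all it takes, and that is the kind of tweak conceptual knowledge of matplotlib lets you make yourself.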

3. Don’t use LLMs for things you don’t understand and can’t easily verify

This may be the most important rule for data scientists: it’s easy to ask an AI to write you code to do a certain kind of analysis. But if you don’t understand that code, you can’t be sure it’s doing the thing you want it to do.

Why can’t you just check the answer? Because one of the things that makes data analysis different from software engineering is that you can’t easily validate that your code generates the right answer: you’re analyzing the data precisely to produce an answer no one has yet! When generating new knowledge, the only way to know whether your code has given you the right answer is to have confidence in the code itself. Yes, you can (and should!) test your code against toy examples where you know the answer, but for the real data, the true answer is not known.
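Here is a minimal sketch of that kind of toy-example check, assuming a hypothetical analysis step `estimate_slope` (an ordinary least-squares slope). Because the toy data are built from a line whose slope we chose (2), we know the right answer and can assert on it; on real data no such answer exists, which is why confidence in the code itself matters.

```python
import random


def estimate_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs (hypothetical analysis step)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Toy case 1: data built from y = 2x + 1 exactly, so the slope must be 2.
xs = list(range(10))
ys = [2 * x + 1 for x in xs]
assert abs(estimate_slope(xs, ys) - 2) < 1e-9

# Toy case 2: the same line plus noise; with many points the estimate
# should land close to 2, though not exactly on it.
rng = random.Random(0)  # fixed seed so the check is reproducible
noisy_xs = [rng.uniform(0, 10) for _ in range(5_000)]
noisy_ys = [2 * x + 1 + rng.gauss(0, 1) for x in noisy_xs]
assert abs(estimate_slope(noisy_xs, noisy_ys) - 2) < 0.05
```

Passing checks like these is evidence, not proof, that the code does what you think; failing one means you caught a bug before it touched your real results.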

Thus, if you can’t check the work the LLM did, and you can’t easily verify the result (as you can when formatting a plot or renaming files), you really shouldn’t be using an LLM.

Now, to be clear, that doesn’t mean you should never use an LLM for something you couldn’t do on your own without help. But if there are parts of the LLM-generated code you aren’t familiar with, you should make sure you understand them before moving on. In other words, tasks that are a little beyond you, but close enough that you can understand the solution when you see it (maybe after a little thinking), are good use cases for LLMs.

But don’t use an LLM to write a big block of code you really don’t understand, and then use that code when you can’t easily verify its correctness!!

4. Don’t let LLMs make suggestions in real time

As with so many technologies (looking at you, social media), it’s important to be sure YOU are in control of when and how AI influences your thinking.

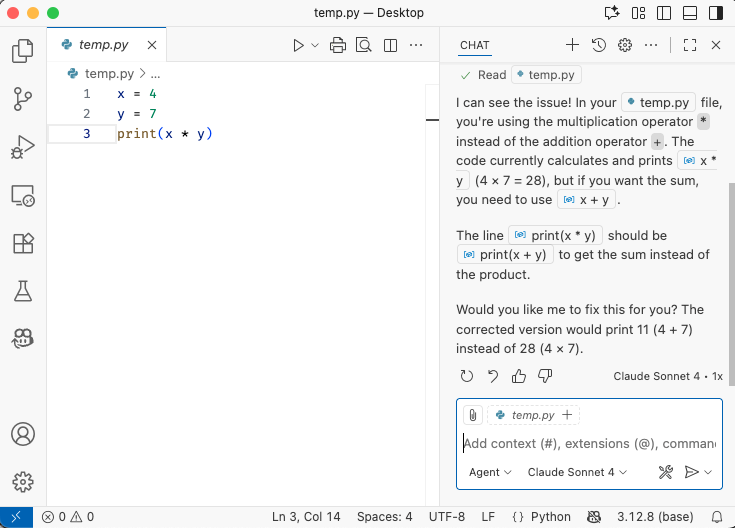

For that reason, I much prefer chat-based LLM coding interfaces to tools that pop up suggestions in real time as you type. In particular, I use the GitHub Copilot chat interface when I use an LLM for coding. This is a chat window that opens in VS Code beside my code to offer suggestions. The tool can directly modify my code if I ask it to, but it only acts when I ask it to act, and I can easily put it away.

I’ve attached it to a keyboard shortcut so that I don’t have to leave it open to feel like it’s accessible. Instead, it’s almost never in view, and when I decide it would be helpful I invoke it, use it, and then close it.

Tools that offer code suggestions as you type (as greyed-out code), by contrast, are my absolute least favorite form of LLM tool. The reason is that you can’t help but have your thought process influenced by those suggestions.

“Sure,” you say, “I don’t have to accept the LLM’s suggestions.” That’s true, but even if you don’t accept an LLM code completion or recommendation, it is almost impossible to entirely ignore text that pops up right in front of your cursor. And as soon as you engage with that text mentally, it will start to influence how you think about a problem.

5. Do Use an LLM that can see your project

One of the most important factors for getting good LLM results when coding is ensuring that the LLM can see what you’re working on. Context, in other words, is King.

So when you use an LLM, regardless of the UI, you should ask yourself, “Does the LLM have enough context for effective use?”

That means that if you’re using a chat app running in your browser, make sure to upload the files that are relevant to your question.

Personally, I find the most straightforward way to give an LLM visibility into your work is to use GitHub Copilot Chat in VS Code. Using Copilot Chat means that the LLM will automatically see all the files you have open or which are visible in your folders tab. Moreover, it is model-agnostic, by which I mean it lets you pick from a range of different LLMs to run on the backend. (You can get Copilot Pro free with a student GitHub account, which gives you access to more models than the free tier does.)

If it’s helpful, I currently use Chat in Agent mode (it will put code into your files in response to prompts and ask if you want to keep it) with Claude Sonnet 4.5.